HCI Diary: iOS Notifications

October 22, 2011 | Comments So, one part of the MSc I'm sat on at Sussex is Human-Computer Interaction, which I'm being tutored in by Graham McAllister; and he's gently floated the possibility of our writing online about the course and our observations throughout it. So here goes; first up, we've been asked to look at iOS 5 from an HCI perspective.

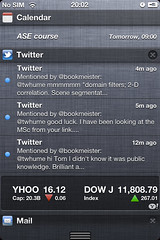

That's quite a big ask, so I've spent a few minutes running through one small but obvious new feature: the Notification Center which can now be pulled down from the top of the screen, and allows applications to request attention or leave quick-to-access information lying somewhere visible or constantly accessible.

I'll not mention the shocking similarity between this approach to notifications and the pre-existing Android feature; as Zach Epstein points out in a good comparison, WebOS at least demonstrated that there's room for something different, maybe even better.

Some observations about the Notification Center (NC) bar on iOS 5, looking at it up close:

- It's easily found with the finger: Fitts's Law in good effect here, as I can pull down from anywhere above the top of the iPhone screen and drag the NC bar into visibility. It's quite helpful that the front of the iPhone is a single flat surface: there are no ridges to catch your finger at any point in the movement of pulling down the NC bar;

- It's immediately useful: you can pull down the NC bar just a fraction and start seeing your most recent notification, then quickly dismiss. No need to wait for the whole thing to appear, or to completely obscure what you are otherwise doing with your phone;

- If you part-show the bar and then take your finger off the screen, there seems to be a subtle bit of logic going on to decide whether the bar should snap down and take up the whole screen, or snap up and disappear. As far as I can tell it works like this:

    - If not even a single item in the NC bar has been made visible, snap up and hide it on release (the logic being that if you've not looked at anything useful and have taken your finger off the screen, you're probably not interested in the contents of the NC);

    - If more than a single item has been made visible and the last motion of your finger was downwards (i.e. you were opening it and had done so more than a minimal amount), keep opening the NC;

    - Otherwise, snap it closed - you were shutting it anyway, right?

- Clicking on an item in the NC takes you through to the app that put the notification there, providing quick access to apps which need your attention;

- Other apps (weather and stocks, initially) sit permanently in the NC, at the top and bottom respectively;
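My guess at the snap-open/snap-closed logic in the list above can be written down as a tiny decision function. This is only a sketch of the behaviour as I observed it, not Apple's implementation: the names `Snap` and `snap_on_release` are made up for illustration, and I've read the rules literally, so the case where exactly one item is visible falls through to the final "snap it closed" rule.

```python
from enum import Enum


class Snap(Enum):
    """Where the NC bar ends up once the finger leaves the screen."""
    OPEN = "open"
    CLOSED = "closed"


def snap_on_release(visible_items: int, last_motion_down: bool) -> Snap:
    """Guess at the release behaviour of the NC bar (hypothetical helper).

    visible_items: how many notification items had been revealed on release.
    last_motion_down: True if the finger's last movement was downwards.
    """
    # Rule 1: not even one item was revealed, so hide the bar.
    if visible_items < 1:
        return Snap.CLOSED
    # Rule 2: more than one item revealed and the finger was still heading down.
    if visible_items > 1 and last_motion_down:
        return Snap.OPEN
    # Rule 3: anything else is treated as the user closing the bar.
    return Snap.CLOSED
```

So dragging down far enough to reveal three items and letting go mid-pull would open the bar fully, whilst the same three items with an upward flick would close it.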

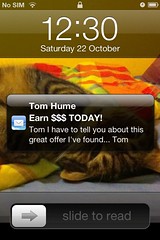

The other aspect to notifications is their appearance on the idle screen of the phone; again, configurable on a per-app basis. This brought back memories of a chat with a customer years ago; they were a mobile network operator and talked about designing for various personas, one of which was having an affair - in order to consider some interesting edge cases around privacy and floating potentially damaging messages onto the idle-screen.

The old "alert style" notifications are still available to users, on an app-by-app basis: an uncharacteristic abdication of UI responsibility from Apple, more characteristic of Android.

Clearly useful stuff - I personally found the old notification system of modal pop-up dialog boxes irritating, as did many people. Possible areas for improvement:

- I can't seem to be able to dismiss individual notifications (though I can dismiss all from a specific app). With many apps populating the NC, it quickly becomes a long and crowded list; but having the ability to remove individual entries might engender a behaviour of "checking off" old notifications, making the whole feature more disruptive to everyday usage of your phone than it currently is;

- I can't do things from within notifications (e.g. reply to a Tweet), all I can do is click on them to go through to the app which created them - though individual notifications can lead to screens within an app, so clicking on a Tweet in the NC bar takes me through to that tweet within Twitter. It would be nice to be able to perform basic operations on notifications, and there's clearly potential for interactivity there already: the stock application lets you drag the horizontally scrolling ticker around from within the NC bar.

Setting fire to SMS and pushing it out to sea

October 19, 2011 | Comments "How long before iChat (notably missing from the iPhone to date) starts to compete with SMS?", I asked. 16 months, it turns out.

My completely atypical mobile-geek multiple-device-owning lifestyle has thrown an abnormal amount of light onto iMessage, which is clearly Apple starting to replace text messaging in a characteristically subtle way. I'm actually using a Galaxy S2 day-to-day, but I have an iPhone 4 kicking about which I upgraded to iOS 5 the other day. As part of the upgrade process, I had to insert my SIM card, and it appears that during the process Apple recorded my mobile number and associated it with that device.

The upshot is that anyone sending me a message from their iOS 5 device now punts me (without realising it) an iMessage rather than the SMS they would previously have sent. iOS seems to detect if you're sending a message to someone else Apple thinks owns an iOS device, and does this automagically. They also downplay the fact that this is going on; the only reason I'm noticing this is that the iMessage goes to the iPhone which sits at my bedside being The World's Most Pretentious Alarm Clock, and my iPhone-owning friends think I've stopped talking to them.

It appears that you can't choose to send a text message, but have to deactivate iMessage completely in your settings to get around this. I'm actually a little impressed by how Apple are doing it: it's entirely possible to not notice that they've replaced a core part of mobile communications. It's a little annoying in my case, but I can see that asking which of umpteen different ways (SMS, MMS, Email, etc) I'd like to send a given contact a message, every time I message them, would just be irritating… and, I note, exactly the kind of thing most other device manufacturers have done for years.

Extended Selves

October 13, 2011 | Comments To London, and a lecture at the LSE by Katalin Farkas on Extended Selves.

Katalin is a philosophy professor, and presented an interesting theory: the mind is composed of two sets of features, the stream of consciousness (everyday meanderings that bubble up and away) and what she calls "standing features": the long-held beliefs, desires, etc., which define us. When we're asleep, the former shuts off, the latter persists: we are still ourselves when unconscious.

So her thesis (the Extended Mind, or EM thesis) is that we are a set of beliefs or dispositions, and where these dispositions are stored makes no difference to whether they are beliefs: consider a man with a poor memory who documents his beliefs in a notebook and retrieves them from there. This is controversial because the notebook is outside his brain ("spatial extension", as Katalin referred to it), but we accept prosthetic limbs, so this shouldn't be a problem. And what if the notebook were inside the cranium, would that make a difference?

How far can this go? Consider a student who passes her exams with the aid of a 24/7 consultant: she understands what she's writing but didn't originate it. The consultant is effectively an external prop. This might be morally problematic today, but many of us outsource some of our beliefs in specialist areas to specialists: you could consider accountants to be a repository of outsourced beliefs.

If the EM thesis is correct, the value of certain types of expertise may change over time. We see this happen today - like it or not! - with spelling, or driving directions. What it takes to be an expert changes, with the addition of expert devices. Katalin spoke about "diminished selves", which troubled me slightly: I couldn't help wondering if she would have considered our species to have diminished when we invented language, or the written word, and could start transmitting, storing and outsourcing our knowledge.

And I also observed that the problem many people have with digital prostheses might relate to lack of control over them: they require electricity or connectivity to function; they don't self-maintain as the human body heals, and the data flowing through them might be subject to interception or copying.

Interesting stuff, and the evening ended with a quite lively discussion between the audience and Professor Farkas...

Vexed acquires Future Platforms

October 10, 2011 | Comments Big news from me: Future Platforms has been acquired by Vexed Digital.

Vexed is the agency that my old boss, Richard Davies, set up a few years after leaving Good Technology, and the core team there is the old team from GT. My first foray into mobile in 1999 was when I set up GT Unwired, a division of GT which Just Did Mobile, so this feels a little bit like a return home for FP.

Vexed are talented and experienced folks with some great customers and stronger management skills than I've been able to bring to FP. They also know us well - Richard has sat on our advisory board and provided a shoulder to cry on, on occasion. I'm looking forward to seeing what we can do together. This is *definitely* going to be the year that mobile takes off ;)

We're going to start by harmonising where we can (sharing tools and processes, mainly), and are already collaborating on some pleasantly large projects, but FP will remain a separate entity and brand - based in Brighton and employing everyone who works there today. We'll also have facilities in London available to us, which might please those of our customers who have trouble enjoying the Brighton/Victoria line.

As for me: I'm going to carry on working for the business part time, and am filling otherwise empty hours with a return to academia: last week I started on the Advanced Computer Science MSc which Sussex University have begun offering this year.

The ride's not over yet, but it feels remiss of me not to thank everyone who's worked hard to get FP to this point: that'll be everyone who's worked for us, everyone who's still there, and our advisors over the years. In particular I should call out my original co-conspirator Mr Gooby (who shares the blame for FP) and Mr Falletti, who's kept me upright and out of trouble for the last 6 years.

app.ft.com, and the cost of cross-platform web apps

October 05, 2011 | Comments One of the most interesting talks at OverTheAir was, for me, hearing Andrew Betts of Assanka talk about the work he and his company had done on the iPad web app for the Financial Times, app.ft.com.

It's an interesting piece of work, one of the most accomplished tablet web-apps I've yet seen, and has received much attention from the publishing and mobile industry alike. I've frequently heard it contrasted favourably with the approach of delivering native apps across different platforms, and held up as the shape of things to come. Andrew quickly dispelled any notion I had that this had been a straightforward effort, by going into detail on some of the approaches his team had taken to launch the product:

- Balancing of content across the horizontal width of the screen;

- Keeping podcasts running by having HTML5 audio in an untouched area of the DOM, so they'd not be disturbed by page transitions;

- Categorisation of devices by screen width;

- Implementing the left-to-right carousel with 3 divs, and the detail of getting flinging effects "just so";

- Problems with atomic updates to app-cache (sometimes ameliorated by giving their manifest file an obscure name);

- Using base64 encoded image data to avoid operator transcoding;

- How to generate and transfer analytics data with an offline product;

- Difficulties handling advertising in an offline product;

- Problems authenticating with external OAuth services like Twitter or Facebook, when your entire app is a single page;

- Horrendous issues affecting 8-9% of iPhone users, who need to reboot their phones periodically to use the product (I picked this up from a colleague of Andrew's in a chat before the talk);

- Lack of hardware-accelerated CSS causing performance problems when trying to implement pan transitions on Android;

- etc.
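To make one of those items concrete: inlining an image as base64 means it travels inside the page (or stylesheet, or script) as text, so there's no separate image request for an operator's proxy to intercept and recompress. A minimal sketch of the idea follows; it is not Assanka's code, and `to_data_uri` is a hypothetical helper name of my own.

```python
import base64


def to_data_uri(image_bytes: bytes, mime_type: str = "image/png") -> str:
    """Wrap raw image bytes in a data: URI (hypothetical helper).

    The result can be dropped straight into an <img src="..."> attribute
    or a CSS url(...), so the image is never fetched as a separate file.
    """
    # Base64 output is plain ASCII, so it can be decoded safely to str.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"
```

The trade-off is size: base64 text is roughly a third larger than the bytes it encodes, which matters for something being squeezed into an offline cache.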

All of this really drove home the amount of work which had gone into the product: app.ft.com took a full-time team of 3 developers at Assanka 8 months to launch on iPad, and that team a further 4 months to bug-fix the iPad version and ready it for distribution to Android tablets. (It's not available for Android tablets just yet, but that's apparently due to a customer-service issue: the product is there.)

Seeing the quality of the product, I've no doubt that the team at Assanka know their stuff; and given the amazing numbers the FT report for paying digital subscriptions and their typical pricing for subscriptions, I'm sure the economics of this have worked well for them.

But the idea that it takes 24 developer-months to deliver an iPad newspaper product to a single platform using web technologies is, to me, an indication of the immaturity of these technologies for delivering good mobile products. By comparison, at FP we spent 20 months launching the Glastonbury app across 3 completely different platforms and many screen sizes; but this 20 months included two testers and two designers working at various stages of the project; development-wise I'd put our effort at 13 months total. Not that the two apps are equivalent, but I want to provide some sort of comparative figure for native development. I'd be very interested if anyone can dig out how long the FT native iPad app took…

And the fact that it's taken Assanka a further 12 man-months to get this existing product running to a good standard on Android is, to me, an indication of the real-world difficulties of delivering cross-platform app-like experiences using web technologies.
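For anyone wanting to check my sums, the effort figures above fall out of simple multiplication. The sketch below assumes the team stayed at 3 full-time developers throughout, which is how I understood it; the variable names are mine, and the Glastonbury figure of 13 is my own estimate rather than something calculated.

```python
def developer_months(developers: int, months: int) -> int:
    """Effort for a team of a given size working for a number of months."""
    return developers * months


# app.ft.com: 3 developers for 8 months to launch on iPad.
ft_ipad_launch = developer_months(3, 8)

# The same team for a further 4 months of iPad fixes and Android readiness.
ft_android_followup = developer_months(3, 4)

# Glastonbury app: my estimate of development-only effort across 3 platforms.
fp_glastonbury_dev = 13

print(ft_ipad_launch, ft_android_followup, fp_glastonbury_dev)
```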

It strikes me that there's an unhelpful confusion with all this web/native argument: the fact that it's easy to write web pages doesn't mean that it's easy to produce a good mobile app using HTML. And the fact that web browsers render consistently doesn't mean that the web can meet our cross-platform needs today.

Adam Cohen-Rose took some more notes on the talk, here.

(At some point in the coming months I promise to stop writing about web/native, but it keeps coming up in so many contexts that I still think there's value in posting new insights.)